Logstash is an open-source data processing pipeline that ingests data from various sources, transforms it, and then sends it to a destination of your choice, typically Elasticsearch, for storage and analysis. Logstash is part of the Elastic Stack, along with Elasticsearch and Kibana, and is commonly used for log and event data collection and processing.

Capabilities of Logstash include:

• Data Ingestion: Logstash supports various input plugins to ingest data from different sources such as files, databases, message queues, and network protocols.

• Data Transformation: Logstash provides a wide range of filter plugins for transforming and enriching data. These filters can perform tasks like parsing, grokking, geoip lookup, anonymization, and more.

• Data Output: Logstash supports various output plugins to send processed data to different destinations such as Elasticsearch, Amazon S3, Kafka, and many others.

• Pipeline Processing: Logstash allows you to define complex data processing pipelines with multiple stages for ingestion, transformation, and output.

• Scalability: Logstash can be scaled horizontally to handle large volumes of data by distributing workload across multiple instances.

Logstash Service Architecture

Logstash processes logs from different servers and data sources and it behaves as the shipper. The shippers are used to collect the logs and these are installed in every input source. Brokers like Redis, Kafka or RabbitMQ are buffers to hold the data for indexers, there may be more than one broker as failed instances.

Indexers like Lucene are used to index the logs for better search performance and then the output is stored in Elasticsearch or other output destination. The data in output storage is available for Kibana and other visualization software.

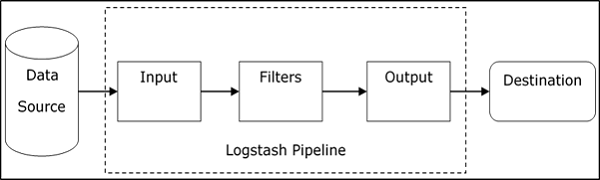

Logstash Internal Architecture

The Logstash pipeline consists of three components Input, Filters and Output. The input part is responsible to specify and access the input data source such as the log folder of the Apache Tomcat Server.

Common Syntax

The Logstash configuration file just copies the data from the inlog.log file using the input plugin and flushes the log data to outlog.log file using the output plugin.

Syntax:

file {

path => "C:/tpwork/logstash/bin/log/inlog.log"

}

}

output {

file {

path => "C:/tpwork/logstash/bin/log/outlog.log"

}

}

Cmd Run Logstash

Logstash uses –f option to specify the config file.

Problem Statement:

There was a need to synchronize various tables in Elasticsearch to enable search functionality. However, each time a new index had to be created, it required writing custom code, which was time-consuming and often tedious.

Solution:

Thanks to Logstash, this process can now be accomplished quickly and easily. Logstash allows us to sync multiple tables effortlessly through its pipeline configuration.

EXPLAINING SAMPLE CONFIG

Now let’s break down the provided Logstash configuration:

jdbc {

jdbc_driver_library => "\path\mysql-connector-j-8.1.0\mysql-connector-j-8.1.0.jar"

jdbc_driver_class => "com.mysql.cj.jdbc.Driver"

jdbc_connection_string => "jdbc:mysql://localhost:3306/database"

jdbc_user => "root"

jdbc_password => "root"

schedule => "*/2 * * * *"

statement => "SELECT * FROM table_name

WHERE id > :sql_last_value limit 100; "

use_column_value => true

tracking_column => "id"

tracking_column_type => "numeric"

last_run_metadata_path => "\path\z_index_one.txt"

tags => ["table_name"]

jdbc_default_timezone => "UTC"

}

}

output {

if "table_name" in [tags] {

elasticsearch {

hosts => ["http://localhost:9200/"]

index => "index_name"

document_id => "%{id}"

}

}

}

Output:

Specifies the input source for Logstash. In this case, it’s using the jdbc input plugin, which allows Logstash to query a JDBC database.

- jdbc_driver_library: Path to the JDBC driver library.

- jdbc_driver_class: JDBC driver class name.

- jdbc_connection_string: JDBC connection string to the database.

- jdbc_user: Username for database authentication.

- jdbc_password: Password for database authentication.

- schedule: Cron expression defining how often the SQL query should be executed.

- statement: SQL query to execute. :sql_last_value is a Logstash parameter used for incremental fetching.

- use_column_value: Specifies whether to use the last value of the tracking column.

- tracking_column: Column used for tracking incremental changes.

- tracking_column_type: Data type of the tracking column.

- last_run_metadata_path: Path to the file where Logstash stores metadata about the last execution.

- tags: Additional metadata attached to events generated by this input.

Output: Specifies the destination for processed data. In this case, it’s using the Elasticsearch output plugin to send data to Elasticsearch.

- if: Conditional statement to filter events based on their tags.

- elasticsearch: Specifies Elasticsearch as the destination.

- hosts: Elasticsearch cluster URLs.

- index: Index name where data will be stored.

- document_id: Document ID to use when storing data. Here, it’s using the id field from the incoming data.

Hello. excellent job. I did not anticipate this. This is a excellent story. Thanks!

Heard a lot about w88asia and decided to check it out. Pretty good selection of Asian games and sports betting too. Seems like a decent platform so far. Check it out: w88asia

Alright, 88clbgame, heard some buzz ’round town. Gave it a whirl, pretty decent selection and the bonuses weren’t bad. Nothing crazy, but solid for a chill night in. Gonna check back later to see if they update the games. Check it out yourself: 88clbgame

Hello, you used to write excellent, but the last few posts have been kinda boring?K I miss your super writings. Past few posts are just a bit out of track! come on!

Hey all, Coin777 has been on my radar lately. I really like the platform. Definitely worth checking out for some casual fun. coin777

You can definitely see your enthusiasm in the work you write. The world hopes for even more passionate writers like you who are not afraid to say how they believe. Always follow your heart.

Thank you so much for giving everyone an extremely splendid possiblity to read in detail from here. It is usually very awesome and jam-packed with a good time for me and my office friends to visit your web site really thrice per week to learn the newest tips you will have. And definitely, I am also certainly astounded with the impressive guidelines you give. Selected 3 points on this page are certainly the most efficient we have ever had.

Thanks for the update, how can I make is so that I receive an email sent to me whenever you write a fresh post?

My spouse and i have been absolutely ecstatic that John managed to round up his analysis via the precious recommendations he made from your web pages. It’s not at all simplistic to just choose to be giving freely guidelines which often the others have been trying to sell. And we keep in mind we now have you to appreciate because of that. All of the explanations you’ve made, the straightforward blog menu, the friendships your site aid to instill – it’s got mostly incredible, and it’s really helping our son and the family know that this issue is exciting, which is certainly rather important. Thanks for everything!

Fantastic blog! Do you have any tips for aspiring writers? I’m planning to start my own website soon but I’m a little lost on everything. Would you advise starting with a free platform like WordPress or go for a paid option? There are so many options out there that I’m totally confused .. Any tips? Bless you!

It is appropriate time to make some plans for the future and it is time to be happy. I have read this post and if I could I wish to suggest you few interesting things or tips. Maybe you can write next articles referring to this article. I wish to read even more things about it!

I think you have remarked some very interesting details , regards for the post.

Perfectly indited written content, thank you for entropy. “In the fight between you and the world, back the world.” by Frank Zappa.

I have not checked in here for some time as I thought it was getting boring, but the last few posts are great quality so I guess I will add you back to my everyday bloglist. You deserve it my friend 🙂

You made certain good points there. I did a search on the subject and found most persons will consent with your blog.

Those are yours alright! . We at least need to get these people stealing images to start blogging! They probably just did a image search and grabbed them. They look good though!

Terrific paintings! That is the kind of info that are supposed to be shared across the web. Disgrace on the seek engines for no longer positioning this publish upper! Come on over and visit my web site . Thanks =)

You should take part in a contest for one of the best blogs on the web. I will recommend this site!

This is the proper blog for anyone who desires to find out about this topic. You understand a lot its almost laborious to argue with you (not that I really would need…HaHa). You definitely put a new spin on a topic thats been written about for years. Great stuff, just great!

I¦ve read several just right stuff here. Definitely price bookmarking for revisiting. I wonder how much effort you set to make one of these great informative site.

Very clean web site, appreciate it for this post.

I reckon something truly special in this web site.

It is really a nice and helpful piece of information. I am glad that you shared this useful info with us. Please keep us up to date like this. Thank you for sharing.

Normally I do not read article on blogs, but I wish to say that this write-up very forced me to try and do so! Your writing style has been surprised me. Thank you, quite great article.

Hi! I just wanted to ask if you ever have any trouble with hackers? My last blog (wordpress) was hacked and I ended up losing several weeks of hard work due to no back up. Do you have any solutions to stop hackers?

I haven?¦t checked in here for a while as I thought it was getting boring, but the last several posts are great quality so I guess I will add you back to my daily bloglist. You deserve it my friend 🙂

You made some nice points there. I did a search on the subject and found most guys will consent with your site.

I love your blog.. very nice colors & theme. Did you create this website yourself? Plz reply back as I’m looking to create my own blog and would like to know wheere u got this from. thanks

Hello! I could have sworn I’ve been to this blog before but after browsing through some of the post I realized it’s new to me. Anyways, I’m definitely happy I found it and I’ll be book-marking and checking back frequently!

Hi there just wanted to give you a quick heads up. The words in your post seem to be running off the screen in Safari. I’m not sure if this is a format issue or something to do with internet browser compatibility but I thought I’d post to let you know. The style and design look great though! Hope you get the issue solved soon. Cheers

Heya i am for the first time here. I found this board and I find It truly useful & it helped me out a lot. I’m hoping to offer one thing back and aid others like you helped me.

I’ve been browsing online more than three hours today, yet I never found any fascinating article like yours. It is pretty value sufficient for me. In my view, if all webmasters and bloggers made good content material as you did, the internet shall be much more useful than ever before. “Wherever they burn books, they will also, in the end, burn people.” by Heinrich Heine.

Hey, you used to write great, but the last few posts have been kinda boring… I miss your tremendous writings. Past few posts are just a little out of track! come on!

Hello! This post could not be written any better! Reading through this post reminds me of my good old room mate! He always kept chatting about this. I will forward this article to him. Pretty sure he will have a good read. Thank you for sharing!

I am not rattling good with English but I come up this real easy to translate.

There is noticeably a bundle to know about this. I assume you made certain nice points in features also.

I know this if off topic but I’m looking into starting my own weblog and was wondering what all is needed to get setup? I’m assuming having a blog like yours would cost a pretty penny? I’m not very web savvy so I’m not 100 sure. Any tips or advice would be greatly appreciated. Thanks

Rattling wonderful visual appeal on this internet site, I’d rate it 10 10.

Hello.This article was really remarkable, particularly because I was searching for thoughts on this subject last Monday.

This actually answered my drawback, thank you!

I have been browsing online greater than three hours these days, yet I by no means discovered any interesting article like yours. It is pretty worth enough for me. In my opinion, if all webmasters and bloggers made just right content as you probably did, the internet will probably be much more helpful than ever before.

Heya i am for the primary time here. I came across this board and I find It really useful & it helped me out a lot. I’m hoping to present one thing back and help others such as you helped me.

As I website owner I believe the content here is very superb, thanks for your efforts.

Way cool, some valid points! I appreciate you making this article available, the rest of the site is also high quality. Have a fun.

I’m very happy to read this. This is the type of manual that needs to be given and not the random misinformation that is at the other blogs. Appreciate your sharing this greatest doc.

Some truly interesting details you have written.Helped me a lot, just what I was searching for : D.

You made some decent points there. I looked on the internet for the subject matter and found most guys will consent with your blog.

I truly appreciate this post. I have been looking everywhere for this! Thank goodness I found it on Bing. You’ve made my day! Thank you again

Real clean web site, thankyou for this post.

You have noted very interesting details ! ps decent site.

With havin so much content and articles do you ever run into any problems of plagorism or copyright violation? My site has a lot of unique content I’ve either created myself or outsourced but it seems a lot of it is popping it up all over the web without my permission. Do you know any solutions to help stop content from being stolen? I’d definitely appreciate it.

Hey, you used to write excellent, but the last several posts have been kinda boringK I miss your great writings. Past several posts are just a little out of track! come on!

I have been surfing online more than three hours nowadays, but I never found any interesting article like yours. It is lovely price enough for me. Personally, if all website owners and bloggers made good content as you probably did, the web will probably be a lot more useful than ever before. “No nation was ever ruined by trade.” by Benjamin Franklin.

Wow! Thank you! I continuously wanted to write on my website something like that. Can I implement a portion of your post to my blog?

What i don’t understood is in reality how you’re not actually much more neatly-appreciated than you may be right now. You’re very intelligent. You recognize therefore considerably in relation to this matter, produced me individually imagine it from a lot of numerous angles. Its like women and men don’t seem to be involved except it’s one thing to do with Woman gaga! Your individual stuffs excellent. Always maintain it up!

I’m no longer positive where you’re getting your information, however great topic. I needs to spend a while finding out much more or understanding more. Thank you for excellent information I was looking for this info for my mission.

Great post and straight to the point. I don’t know if this is actually the best place to ask but do you people have any ideea where to hire some professional writers? Thanks in advance 🙂

My partner and I stumbled over here by a different web address and thought I may as well check things out. I like what I see so now i am following you. Look forward to looking into your web page repeatedly.

There are some attention-grabbing deadlines on this article but I don’t know if I see all of them center to heart. There’s some validity but I’ll take hold opinion till I look into it further. Good article , thanks and we want more! Added to FeedBurner as properly

Some truly interesting information, well written and broadly speaking user genial.

I have recently started a web site, the information you offer on this website has helped me tremendously. Thank you for all of your time & work.

I was recommended this website through my cousin. I’m now not sure whether or not this post is written by him as no one else recognize such particular approximately my trouble. You are amazing! Thank you!

I consider something really special in this web site.

We’re a group of volunteers and opening a new scheme in our community. Your site offered us with valuable info to work on. You have done an impressive job and our whole community will be thankful to you.

Interesting analysis! The psychology of choosing numbers is fascinating, mirroring how platforms like jl7777 apk offer diverse game choices. Secure registration & easy access are key for enjoyable play! 🤔

I would like to show appreciation to you just for rescuing me from this type of matter. As a result of researching throughout the search engines and finding solutions which are not beneficial, I assumed my life was over. Existing minus the solutions to the difficulties you have fixed through the guideline is a crucial case, as well as those that would have negatively damaged my entire career if I hadn’t come across your web page. That capability and kindness in controlling all the stuff was tremendous. I don’t know what I would’ve done if I had not come upon such a solution like this. I’m able to at this point look forward to my future. Thanks so much for the high quality and amazing guide. I won’t think twice to recommend your web blog to any individual who would need guide about this area.

Hello there, simply became aware of your weblog via Google, and found that it is truly informative. I’m going to watch out for brussels. I will be grateful if you happen to proceed this in future. Numerous other people will likely be benefited from your writing. Cheers!

Howdy! I simply wish to give an enormous thumbs up for the nice data you’ve got here on this post. I will be coming back to your weblog for extra soon.

I truly appreciate this post. I¦ve been looking everywhere for this! Thank goodness I found it on Bing. You’ve made my day! Thanks again

Awesome site you have here but I was curious if you knew of any community forums that cover the same topics talked about in this article? I’d really love to be a part of online community where I can get responses from other knowledgeable individuals that share the same interest. If you have any recommendations, please let me know. Appreciate it!

Alright, so I was trying wk7777 and lets just say you win some you loose some. Overall its good entertainment and keeps you on the edge of your seat. Take a look wk7777.

Heard about jili88phcomapp? Gave it a try, and it’s alright! The app runs smoothly, which is a huge plus. If you’re looking for convenience, this might be your spot. Learn more here: jili88phcomapp

id888casino, eh? Not bad! Decent selection, good user experience. Might be a good choice for your chill nights. Check it out for yourself: id888casino

I am often to blogging and i really appreciate your content. The article has really peaks my interest. I am going to bookmark your site and keep checking for new information.

whoah this blog is great i love reading your articles. Keep up the good work! You know, lots of people are hunting around for this information, you could aid them greatly.

What’s Going down i am new to this, I stumbled upon this I have discovered It absolutely helpful and it has helped me out loads. I’m hoping to give a contribution & assist other users like its aided me. Great job.

This is a topic close to my heart cheers, where are your contact details though?

I don’t ordinarily comment but I gotta state regards for the post on this one : D.

you have a great blog here! would you like to make some invite posts on my blog?

I’m often to running a blog and i really recognize your content. The article has really peaks my interest. I’m going to bookmark your website and keep checking for new information.

I like what you guys are up too. Such smart work and reporting! Keep up the excellent works guys I have incorporated you guys to my blogroll. I think it’ll improve the value of my site 🙂

Alright, 7e777game, you’ve got my attention. Let’s see if you can keep it. Exploring it now. Hoping for some epic gameplay! See what 7e777game is about 7e777game

Yo, getting hyped about y999apk. Downloading my games has never been easier. It’s quick, simple, just the way i like it. Download yours y999apk

Just stumbled upon x666game. Fingers crossed for some hidden gems in here! Can’t wait to start playing. Check them out x666game

I was reading through some of your content on this website and I conceive this internet site is very informative ! Keep on putting up.

Thanx for the effort, keep up the good work Great work, I am going to start a small Blog Engine course work using your site I hope you enjoy blogging with the popular BlogEngine.net.Thethoughts you express are really awesome. Hope you will right some more posts.

I gotta favorite this site it seems very useful very helpful

Woah! I’m really enjoying the template/theme of this site. It’s simple, yet effective. A lot of times it’s very hard to get that “perfect balance” between user friendliness and visual appearance. I must say you’ve done a very good job with this. Also, the blog loads extremely fast for me on Chrome. Excellent Blog!

I’m really enjoying the design and layout of your blog. It’s a very easy on the eyes which makes it much more pleasant for me to come here and visit more often. Did you hire out a designer to create your theme? Great work!

Yay google is my world beater aided me to find this great website ! .

Hiya, I am really glad I’ve found this info. Today bloggers publish just about gossips and net and this is actually annoying. A good blog with interesting content, this is what I need. Thank you for keeping this website, I’ll be visiting it. Do you do newsletters? Cant find it.

Oh my goodness! an incredible article dude. Thanks However I am experiencing difficulty with ur rss . Don’t know why Unable to subscribe to it. Is there anyone getting similar rss downside? Anyone who knows kindly respond. Thnkx

Woah! I’m really digging the template/theme of this site. It’s simple, yet effective. A lot of times it’s very difficult to get that “perfect balance” between superb usability and visual appeal. I must say you have done a very good job with this. Also, the blog loads super quick for me on Firefox. Outstanding Blog!

Some truly wondrous work on behalf of the owner of this web site, dead outstanding subject material.

There are actually a whole lot of details like that to take into consideration. That could be a nice point to convey up. I supply the ideas above as basic inspiration however clearly there are questions like the one you deliver up where the most important thing will likely be working in honest good faith. I don?t know if finest practices have emerged around issues like that, but I’m positive that your job is clearly recognized as a good game. Each boys and girls really feel the affect of just a second’s pleasure, for the remainder of their lives.

I have been checking out a few of your articles and it’s pretty nice stuff. I will definitely bookmark your site.

Please let me know if you’re looking for a author for your blog. You have some really good posts and I feel I would be a good asset. If you ever want to take some of the load off, I’d really like to write some articles for your blog in exchange for a link back to mine. Please blast me an e-mail if interested. Thanks!

I wanted to thank you for this great read!! I definitely enjoying every little bit of it I have you bookmarked to check out new stuff you post…

Its fantastic as your other posts : D, thanks for posting.

I loved up to you will receive carried out proper here. The sketch is attractive, your authored subject matter stylish. however, you command get bought an nervousness over that you would like be turning in the following. sick undoubtedly come more until now once more as exactly the same just about a lot ceaselessly inside of case you protect this increase.

Heya i am for the first time here. I came across this board and I find It truly useful & it helped me out a lot. I hope to give something back and aid others like you aided me.

Sup people, quick shoutout to 92gamelogin. Getting logged into your games has never been easier. Check it out if you’re tired of forgetting passwords: 92gamelogin

Whats good, folks? Been messing around with mwingame and its not half bad. Gives you a few good hours of entertainment. Get it here: mwingame

Pakaviatorgame… flying high! This game is addictive, man. Waiting for that big multiplier! Worth a shot if you like quick games. pakaviatorgame.

I’ve been browsing on-line more than three hours nowadays, yet I by no means discovered any attention-grabbing article like yours. It¦s beautiful worth enough for me. Personally, if all site owners and bloggers made good content as you probably did, the internet will probably be much more helpful than ever before.

Can I just say what a relief to find someone who actually knows what theyre talking about on the internet. You definitely know how to bring an issue to light and make it important. More people need to read this and understand this side of the story. I cant believe youre not more popular because you definitely have the gift.

You should take part in a contest for one of the best blogs on the web. I will recommend this site!

Yay google is my world beater assisted me to find this great website ! .

I love your writing style genuinely enjoying this internet site.

Some genuinely select posts on this internet site, saved to favorites.

I like what you guys are up too. Such clever work and reporting! Keep up the superb works guys I have incorporated you guys to my blogroll. I think it will improve the value of my website 🙂

It is truly a great and helpful piece of information. I am happy that you simply shared this helpful info with us. Please stay us up to date like this. Thanks for sharing.

You are my breathing in, I have few blogs and sometimes run out from brand :). “He who controls the past commands the future. He who commands the future conquers the past.” by George Orwell.

Nice blog! Is your theme custom made or did you download it from somewhere? A design like yours with a few simple adjustements would really make my blog shine. Please let me know where you got your theme. Thanks

Your style is so unique compared to many other people. Thank you for publishing when you have the opportunity,Guess I will just make this bookmarked.2

Simply a smiling visitor here to share the love (:, btw great style. “Reading well is one of the great pleasures that solitude can afford you.” by Harold Bloom.

As I site possessor I believe the content matter here is rattling magnificent , appreciate it for your efforts. You should keep it up forever! Best of luck.

Hey very cool website!! Man .. Beautiful .. Amazing .. I’ll bookmark your site and take the feeds also…I am happy to find so many useful information here in the post, we need work out more strategies in this regard, thanks for sharing. . . . . .

I used to be more than happy to find this web-site.I needed to thanks in your time for this wonderful read!! I definitely having fun with every little bit of it and I’ve you bookmarked to check out new stuff you blog post.

What¦s Taking place i’m new to this, I stumbled upon this I’ve discovered It absolutely useful and it has helped me out loads. I hope to contribute & assist other customers like its helped me. Great job.

certainly like your web site however you need to check the spelling on several of your posts. Several of them are rife with spelling issues and I in finding it very bothersome to tell the truth nevertheless I?¦ll surely come again again.

I have been examinating out many of your stories and i can claim pretty nice stuff. I will surely bookmark your site.

Howdy, i read your blog from time to time and i own a similar one and i was just curious if you get a lot of spam comments? If so how do you reduce it, any plugin or anything you can advise? I get so much lately it’s driving me insane so any help is very much appreciated.

Write more, thats all I have to say. Literally, it seems as though you relied on the video to make your point. You definitely know what youre talking about, why waste your intelligence on just posting videos to your blog when you could be giving us something enlightening to read?

Absolutely written content material, Really enjoyed looking at.

Fantastic beat ! I wish to apprentice while you amend your website, how can i subscribe for a blog site? The account helped me a acceptable deal. I had been a little bit acquainted of this your broadcast provided bright clear idea

I’ve been surfing online more than 3 hours today, yet I never found any interesting article like yours. It’s pretty worth enough for me. In my view, if all web owners and bloggers made good content as you did, the internet will be much more useful than ever before.

Thanks for some other informative website. Where else may I get that kind of info written in such a perfect approach? I’ve a venture that I am simply now operating on, and I’ve been on the look out for such information.

I like this weblog very much, Its a very nice spot to read and get information. “The love of nature is consolation against failure.” by Berthe Morisot.

Hey There. I discovered your weblog the usage of msn. That is a really neatly written article. I’ll be sure to bookmark it and come back to learn more of your helpful info. Thank you for the post. I will definitely return.

Excellent blog here! Also your site loads up very fast! What host are you using? Can I get your affiliate link to your host? I wish my web site loaded up as fast as yours lol

Unquestionably believe that which you stated. Your favorite reason seemed to be at the internet the easiest thing to be aware of. I say to you, I certainly get irked while other people consider issues that they plainly do not realize about. You controlled to hit the nail upon the highest as well as outlined out the whole thing without having side effect , other people can take a signal. Will likely be again to get more. Thanks

Hmm is anyone else experiencing problems with the pictures on this blog loading? I’m trying to find out if its a problem on my end or if it’s the blog. Any suggestions would be greatly appreciated.

Very efficiently written article. It will be supportive to anyone who usess it, as well as yours truly :). Keep doing what you are doing – looking forward to more posts.

Definitely, what a magnificent site and informative posts, I will bookmark your website.All the Best!

The next time I learn a blog, I hope that it doesnt disappoint me as much as this one. I mean, I know it was my choice to learn, however I truly thought youd have one thing fascinating to say. All I hear is a bunch of whining about one thing that you would repair in case you werent too busy on the lookout for attention.

Hi, Neat post. There’s an issue together with your web site in internet explorer, would check this?K IE still is the marketplace leader and a big component to people will miss your magnificent writing due to this problem.

Spot on with this write-up, I truly suppose this website needs much more consideration. I’ll probably be again to read much more, thanks for that info.

It’s best to participate in a contest for among the best blogs on the web. I will recommend this site!

you are really a good webmaster. The web site loading speed is incredible. It seems that you are doing any unique trick. Moreover, The contents are masterpiece. you have done a wonderful job on this topic!

I am really inspired along with your writing talents as well as with the format for your weblog. Is this a paid subject or did you customize it your self? Either way stay up the nice high quality writing, it’s rare to look a great weblog like this one these days..

I am extremely impressed with your writing skills and also with the layout on your blog. Is this a paid theme or did you customize it yourself? Anyway keep up the nice quality writing, it is rare to see a great blog like this one today..

It’s perfect time to make some plans for the longer term and it is time to be happy. I have learn this submit and if I may just I desire to suggest you few attention-grabbing things or advice. Maybe you could write subsequent articles relating to this article. I desire to read more issues approximately it!

You are my inhalation, I have few blogs and very sporadically run out from to brand.

USA Flights 24 — search engine helps you compare prices from hundreds of airlines and travel sites in seconds — so you can find cheap flights fast. Whether you’re planning a weekend getaway, a cross-country adventure, or an international vacation, we make it easy to fly for less.

It is in reality a nice and helpful piece of info. I?¦m happy that you simply shared this helpful information with us. Please stay us up to date like this. Thanks for sharing.

As soon as I noticed this internet site I went on reddit to share some of the love with them.

Some genuinely interesting points you have written.Helped me a lot, just what I was searching for : D.

I’m not sure exactly why but this website is loading very slow for me. Is anyone else having this problem or is it a issue on my end? I’ll check back later on and see if the problem still exists.

Hello there! Do you know if they make any plugins to safeguard against hackers? I’m kinda paranoid about losing everything I’ve worked hard on. Any tips?

Awsome website! I am loving it!! Will come back again. I am taking your feeds also

I genuinely enjoy looking through on this website, it has got great posts. “Don’t put too fine a point to your wit for fear it should get blunted.” by Miguel de Cervantes.

I’ve been surfing online more than 3 hours today, yet I never found any interesting article like yours. It’s pretty worth enough for me. In my view, if all web owners and bloggers made good content as you did, the internet will be much more useful than ever before.

Merely wanna input on few general things, The website layout is perfect, the articles is real superb. “The reason there are two senators for each state is so that one can be the designated driver.” by Jay Leno.

I’ve recently started a web site, the info you offer on this site has helped me greatly. Thank you for all of your time & work.

I’d constantly want to be update on new blog posts on this site, bookmarked! .

Thanks for sharing superb informations. Your web site is very cool. I am impressed by the details that you have on this web site. It reveals how nicely you understand this subject. Bookmarked this website page, will come back for more articles. You, my pal, ROCK! I found simply the info I already searched everywhere and simply couldn’t come across. What an ideal website.

I as well as my friends ended up examining the good tricks found on the blog and so unexpectedly developed an awful suspicion I never expressed respect to the web site owner for those tips. My ladies had been consequently very interested to see all of them and have in effect seriously been loving these things. Appreciate your turning out to be quite accommodating and also for picking such perfect subject areas most people are really wanting to understand about. Our own sincere apologies for not saying thanks to you earlier.

I went over this site and I think you have a lot of fantastic information, saved to fav (:.

Hello there, just became alert to your blog through Google, and found that it is really informative. I am gonna watch out for brussels. I’ll appreciate if you continue this in future. Many people will be benefited from your writing. Cheers!

I like what you guys are up too. Such intelligent work and reporting! Keep up the superb works guys I’ve incorporated you guys to my blogroll. I think it will improve the value of my website :).

whoah this blog is excellent i really like reading your posts. Keep up the good work! You recognize, a lot of individuals are looking round for this information, you could help them greatly.

I have been absent for some time, but now I remember why I used to love this website. Thanks , I¦ll try and check back more frequently. How frequently you update your web site?

What i do not realize is actually how you are not actually much more well-liked than you might be right now. You are so intelligent. You realize therefore significantly relating to this subject, produced me personally consider it from numerous varied angles. Its like men and women aren’t fascinated unless it is one thing to accomplish with Lady gaga! Your own stuffs nice. Always maintain it up!

Thank you for sharing superb informations. Your web site is very cool. I am impressed by the details that you¦ve on this website. It reveals how nicely you understand this subject. Bookmarked this web page, will come back for extra articles. You, my pal, ROCK! I found simply the info I already searched all over the place and just could not come across. What a great web site.

Good day! I could have sworn I’ve been to this site before but after checking through some of the post I realized it’s new to me. Anyways, I’m definitely glad I found it and I’ll be bookmarking and checking back frequently!

As a Newbie, I am constantly browsing online for articles that can benefit me. Thank you

Thank you for some other informative web site. Where else may just I get that type of information written in such an ideal manner? I’ve a challenge that I am just now operating on, and I’ve been on the glance out for such info.

Wow, marvelous weblog layout! How long have you ever been blogging for? you made blogging look easy. The total glance of your web site is great, as neatly as the content!

Great line up. We will be linking to this great article on our site. Keep up the good writing.

Greetings from Florida! I’m bored at work so I decided to check out your site on my iphone during lunch break. I enjoy the knowledge you provide here and can’t wait to take a look when I get home. I’m surprised at how fast your blog loaded on my phone .. I’m not even using WIFI, just 3G .. Anyways, very good blog!

Gotta say, ket qua 9.net is super reliable. All you need for finding the latest results. You won’t regret it. Go check it out: ket qua 9.net

Honestly, ket qua.net 9 is the only place I trust for my lottery results. Their site is always up-to-date, no fluff just straight to the facts! Take a look: ket qua.net 9

I love your blog.. very nice colors & theme. Did you create this website yourself? Plz reply back as I’m looking to create my own blog and would like to know wheere u got this from. thanks

Thank you for the auspicious writeup. It in reality was a leisure account it. Look complex to more delivered agreeable from you! However, how could we communicate?

I’m really impressed with your writing skills as well as with the layout on your weblog. Is this a paid theme or did you modify it yourself? Anyway keep up the nice quality writing, it is rare to see a nice blog like this one nowadays..

An impressive share, I just given this onto a colleague who was doing a little analysis on this. And he in fact bought me breakfast because I found it for him.. smile. So let me reword that: Thnx for the treat! But yeah Thnkx for spending the time to discuss this, I feel strongly about it and love reading more on this topic. If possible, as you become expertise, would you mind updating your blog with more details? It is highly helpful for me. Big thumb up for this blog post!

With havin so much written content do you ever run into any issues of plagorism or copyright infringement? My blog has a lot of completely unique content I’ve either created myself or outsourced but it seems a lot of it is popping it up all over the internet without my permission. Do you know any solutions to help prevent content from being stolen? I’d definitely appreciate it.

You are my aspiration, I own few web logs and rarely run out from to post .

Hey very nice blog!! Man .. Excellent .. Amazing .. I’ll bookmark your blog and take the feeds also…I’m happy to find numerous useful info here in the post, we need work out more strategies in this regard, thanks for sharing. . . . . .

F*ckin¦ awesome things here. I¦m very glad to see your article. Thanks a lot and i’m looking forward to touch you. Will you please drop me a e-mail?

I was suggested this website by my cousin. I am not sure whether this post is written by him as no one else know such detailed about my problem. You’re wonderful! Thanks!

I am often to blogging and i really appreciate your content. The article has really peaks my interest. I am going to bookmark your site and keep checking for new information.

Keep functioning ,terrific job!

Hello there, simply become alert to your blog through Google, and located that it is truly informative. I’m going to be careful for brussels. I’ll appreciate in the event you continue this in future. A lot of other folks might be benefited out of your writing. Cheers!

You have remarked very interesting details ! ps nice internet site.

Hello there, just became alert to your blog through Google, and found that it’s truly informative. I’m gonna watch out for brussels. I’ll be grateful if you continue this in future. A lot of people will be benefited from your writing. Cheers!

I am always invstigating online for ideas that can benefit me. Thanks!

I have been absent for a while, but now I remember why I used to love this site. Thank you, I¦ll try and check back more frequently. How frequently you update your site?

Keep functioning ,fantastic job!

What¦s Taking place i’m new to this, I stumbled upon this I’ve discovered It absolutely useful and it has aided me out loads. I am hoping to give a contribution & aid different users like its aided me. Great job.

Today, I went to the beachfront with my kids. I found a sea shell and gave it to my 4 year old daughter and said “You can hear the ocean if you put this to your ear.” She placed the shell to her ear and screamed. There was a hermit crab inside and it pinched her ear. She never wants to go back! LoL I know this is entirely off topic but I had to tell someone!

Woh I love your content, saved to my bookmarks! .

I like this blog very much so much superb information.

he blog was how do i say it… relevant, finally something that helped me. Thanks

Yo, gave Fun120 a shot. Pretty decent selection of games, and the site’s slick. Honestly, nothing majorly stands out, but it’s a solid option. If you’re bored, give it a whirl. fun120

Gave BJ888game a spin the other night. Found some cool slots I hadn’t seen before. The bonuses were decent too. Nothing to hate, really. Give it a shot if you are looking for different games. bj888game

Downloaded Verdecasinoapp last week. Mobile app plays smooth! Easy-to-use app and good slot games. I reckon you should check it out. verdecasinoapp

Hi would you mind stating which blog platform you’re working with? I’m going to start my own blog soon but I’m having a difficult time making a decision between BlogEngine/Wordpress/B2evolution and Drupal. The reason I ask is because your design seems different then most blogs and I’m looking for something completely unique. P.S My apologies for getting off-topic but I had to ask!

I was very happy to find this net-site.I needed to thanks on your time for this wonderful learn!! I positively enjoying every little little bit of it and I’ve you bookmarked to check out new stuff you weblog post.

Absolutely written subject matter, thankyou for entropy.

I have recently started a website, the info you offer on this website has helped me tremendously. Thanks for all of your time & work. “So full of artless jealousy is guilt, It spills itself in fearing to be spilt.” by William Shakespeare.

You should take part in a contest for one of the best blogs on the web. I will recommend this site!

bingoplus app is a search term used to describe the mobile application version of the Bingo Plus gaming platform.

Hi! I’ve been following your weblog for some time now and finally got the bravery to go ahead and give you a shout out from Houston Tx! Just wanted to say keep up the fantastic job!

Hi, Neat post. There is a problem with your site in internet explorer, may check this… IE nonetheless is the market chief and a good component of people will omit your magnificent writing because of this problem.

Utterly written content, Really enjoyed reading through.

Good post. I be taught something more difficult on completely different blogs everyday. It’ll at all times be stimulating to learn content from other writers and follow slightly one thing from their store. I’d desire to use some with the content on my blog whether or not you don’t mind. Natually I’ll give you a hyperlink in your web blog. Thanks for sharing.

You must participate in a contest for the most effective blogs on the web. I’ll suggest this website!

Utterly pent written content, Really enjoyed examining.

so much good info on here, : D.

I like the efforts you have put in this, appreciate it for all the great posts.

Just wish to say your article is as surprising. The clarity to your submit is simply excellent and i could think you’re a professional in this subject. Fine with your permission let me to grasp your feed to stay updated with drawing close post. Thanks 1,000,000 and please carry on the rewarding work.

Thanks for every other informative blog. The place else could I get that type of info written in such an ideal manner? I’ve a venture that I’m just now operating on, and I have been on the glance out for such info.

Real fantastic information can be found on blog. “You don’t get harmony when everybody sings the same note.” by Doug Floyd.

You actually make it seem so easy with your presentation however I in finding this topic to be actually something which I feel I would by no means understand. It seems too complex and very wide for me. I am having a look ahead on your next submit, I will attempt to get the hold of it!

Good day! Do you know if they make any plugins to safeguard against hackers? I’m kinda paranoid about losing everything I’ve worked hard on. Any recommendations?

I believe this website has some very good information for everyone :D. “The public will believe anything, so long as it is not founded on truth.” by Edith Sitwell.

Just what I was looking for, thanks for posting.

Would love to forever get updated great web site! .

I have been exploring for a bit for any high-quality articles or blog posts on this kind of area . Exploring in Yahoo I at last stumbled upon this site. Reading this information So i’m happy to convey that I’ve a very good uncanny feeling I discovered just what I needed. I most certainly will make sure to don’t forget this site and give it a glance regularly.

Good post. I learn something more difficult on different blogs everyday. It can all the time be stimulating to learn content material from different writers and observe just a little one thing from their store. I’d desire to use some with the content material on my blog whether or not you don’t mind. Natually I’ll give you a link on your web blog. Thanks for sharing.

Whats up very nice site!! Guy .. Beautiful .. Amazing .. I will bookmark your web site and take the feeds also?KI am happy to seek out numerous useful info right here within the put up, we need work out more strategies in this regard, thank you for sharing. . . . . .

I love the efforts you have put in this, thank you for all the great blog posts.

There may be noticeably a bundle to know about this. I assume you made sure nice factors in options also.

Keep up the good work, I read few blog posts on this internet site and I conceive that your blog is really interesting and has got circles of good information.

Your place is valueble for me. Thanks!…

Appreciate it for this wondrous post, I am glad I discovered this site on yahoo.

Heya i am for the first time here. I came across this board and I find It really useful & it helped me out a lot. I hope to give something back and aid others like you aided me.

Valuable info. Lucky me I found your web site by accident, and I’m shocked why this accident did not happened earlier! I bookmarked it.

I was recommended this web site by my cousin. I’m not sure whether this post is written by him as nobody else know such detailed about my difficulty. You’re incredible! Thanks!

I respect your piece of work, thankyou for all the interesting posts.

Valuable information. Lucky me I found your website by accident, and I’m shocked why this accident didn’t happened earlier! I bookmarked it.

I went over this website and I think you have a lot of good information, saved to favorites (:.

Nice blog here! Also your site loads up fast! What web host are you using? Can I get your affiliate link to your host? I wish my website loaded up as fast as yours lol

I truly treasure your work, Great post.